End-to-End Deep Learning with Spark, Determined, and Delta Lake

August 11, 2020

To be successful with deep learning at scale, it is often necessary to transform raw training data into a format better suited for training deep learning models. One of the most common patterns we’ve seen is users applying batch ETL systems such as Apache Spark to preprocess their data before moving it into Determined for accelerated training. In this blog post, we dive into how you can move seamlessly between Spark and Determined to build end-to-end, scalable pipelines for deep learning. In particular, we’ll demonstrate how you can use:

- Distributed data preprocessing with Spark

- Data versioning with Delta Lake

- Simple interface with Determined to read and track a versioned dataset from Delta Lake

- Distributed training with Determined

- Batch inference with Spark

Check out the code for this example here.

The Value of Data Versioning

Before we get started with the code example, it’s worth reviewing why versioned datasets are so useful. As deep learning teams grow, they frequently end up with a constant stream of new data that is being labeled, relabeled, and reprocessed into new training data. Data scientists will often end up with their data, model, and hyperparameters all changing simultaneously. It can be difficult to attribute changes in model performance to any one part of that system. If you don’t have a clear understanding of exactly what data was used to train a model, understanding your model’s performance is hopeless. You’ll be flying blind when trying to make improvements (or worse, when telling your auditor what went into training a model!).

Systems like Delta Lake, Pachyderm, and DVC exist to track data that changes over time. Since Delta Lake is tightly integrated with the Spark ecosystem, we’ll use that for this example to keep track of our data versions.

Data Preprocessing with Spark

Our preprocessing goal is to turn a large image dataset (in this case, images and labels stored in an S3 bucket) into a versioned dataset with Delta Lake. In this simple example, we take raw JPEG images from an S3 bucket and pack them into efficiently stored, versioned Parquet records. In more complex workflows, data engineers often resize images, encode features, or even use deep learning to compute embeddings for downstream tasks. Spark makes it easy to scale these operations to process massive datasets quickly.
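As a rough illustration of the transformation step, the plain-Python sketch below turns a mapping of image paths to raw JPEG bytes into flat records with integer labels. It assumes a hypothetical layout in which each image’s label is the name of its parent directory (for example `s3://bucket/train/cat/0001.jpg`); `label_from_path`, `build_label_index`, and `pack_records` are illustrative names, not part of the example repository. In Spark, the same logic would run over the `path` and `content` columns that Spark 3.0’s `binaryFile` data source produces.

```python
from typing import Dict, Iterable, List


def label_from_path(path: str) -> str:
    """Return the parent directory name, e.g. 's3://b/train/cat/1.jpg' -> 'cat'.

    Assumes the hypothetical layout <prefix>/<label>/<file>.jpg.
    """
    parts = path.rstrip("/").split("/")
    if len(parts) < 2:
        raise ValueError(f"cannot infer a label from {path!r}")
    return parts[-2]


def build_label_index(paths: Iterable[str]) -> Dict[str, int]:
    """Map each distinct label to an integer id, in sorted label order."""
    labels = sorted({label_from_path(p) for p in paths})
    return {label: i for i, label in enumerate(labels)}


def pack_records(files: Dict[str, bytes]) -> List[dict]:
    """Turn {path: raw JPEG bytes} into flat records ready to be written as rows."""
    index = build_label_index(files)
    return [
        {"path": path, "image": data, "label": index[label_from_path(path)]}
        for path, data in sorted(files.items())
    ]
```

Sorting the distinct labels keeps the label-to-integer mapping stable across runs, which matters when the same ids must line up between training and inference.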

Adding data versioning is simple: Delta Lake stores tables as Parquet files plus a transaction log and uses the same Spark write interface as Parquet, so all you need to do is save your table the way you’re used to (specifying the delta format instead of parquet) and you get data versioning and ACID transactions with little to no extra effort. This sets us up to use versioned datasets with Determined.

Simple Integration with Determined

Although Spark 3.0 added first-class GPU support, most often the workloads you’ll run on Spark (e.g. ETL on a 1000-node CPU cluster) are inherently different from the demands of deep learning. If you’re serious about deep learning, you’ll need a specialized training platform, complete with all the tools you need to rapidly iterate on deep learning models.

Two of Determined’s strengths are perfect complements for the large-scale data processing and versioning provided by Spark and Delta Lake: fast distributed training and automatic experiment tracking. We can build a connector between Determined and Delta Lake that allows us to:

- Change the version of a dataset with one line in a configuration file

- Track what version of the data was used to train every model

At its core, this connector is really simple – we read metadata from Delta Lake, download the Parquet files associated with a specific version, and read them using PyArrow. In this example, we then load those files into a PyTorch Dataset, which we can easily use with Determined to train a model.
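The metadata-reading half of such a connector can be sketched by replaying the Delta transaction log. Each commit in `_delta_log/` is a JSON file named with a 20-digit, zero-padded version number and holding one action per line; `add` actions introduce Parquet files and `remove` actions retire them, so replaying commits 0 through N yields the files that make up version N. This is a minimal sketch assuming a table on the local filesystem (the post’s connector downloads from S3) and a log with no checkpoint files or cleaned-up early commits; the function names are hypothetical.

```python
import json
import os
import re
from typing import List, Optional, Set
from urllib.parse import unquote

# Delta commit files are named with a 20-digit, zero-padded version number.
_COMMIT_RE = re.compile(r"^(\d{20})\.json$")


def list_commit_versions(table_path: str) -> List[int]:
    """Return the sorted versions that have a JSON commit file in _delta_log/."""
    log_dir = os.path.join(table_path, "_delta_log")
    versions = []
    for name in os.listdir(log_dir):
        match = _COMMIT_RE.match(name)
        if match:
            versions.append(int(match.group(1)))
    return sorted(versions)


def active_files(table_path: str, version: Optional[int] = None) -> List[str]:
    """Replay commits 0..version and return the Parquet files live at that version.

    version=None means "latest". Checkpoint files are ignored, so every commit
    from version 0 onward must still be present as JSON.
    """
    versions = list_commit_versions(table_path)
    if not versions:
        raise FileNotFoundError(f"no Delta commits under {table_path!r}")
    if versions[0] != 0:
        raise ValueError("log does not start at version 0; checkpoints are unsupported here")
    target = versions[-1] if version is None else version
    if target not in versions:
        raise ValueError(f"version {target} not found (latest is {versions[-1]})")

    live: Set[str] = set()
    for v in versions:
        if v > target:
            break
        commit = os.path.join(table_path, "_delta_log", f"{v:020d}.json")
        with open(commit) as f:
            for line in f:  # one JSON action per line
                if not line.strip():
                    continue
                action = json.loads(line)
                if "add" in action:
                    live.add(action["add"]["path"])
                elif "remove" in action:
                    live.discard(action["remove"]["path"])
    # Paths in the log are relative to the table root and URL-encoded.
    return sorted(os.path.join(table_path, unquote(p)) for p in live)
```

The returned paths can then be read with `pyarrow.parquet.read_table` and combined with `pyarrow.concat_tables` before being wrapped in a PyTorch Dataset.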

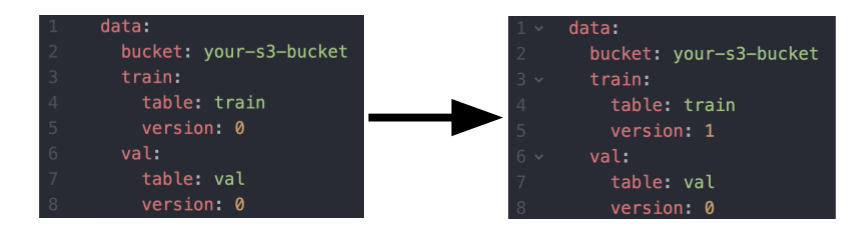

In the provided example, selecting a dataset and a version is as simple as changing a line in a configuration file.
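A hypothetical sketch of how such a setting might be consumed: the configuration is shown as the dictionary you would get after parsing the YAML file, and the `data` section with its `table_path` and `version` keys is an illustrative assumption rather than the example’s actual schema.

```python
from typing import Any, Dict, Optional, Tuple

# Hypothetical parsed experiment configuration; the "data" keys are illustrative.
EXAMPLE_CONFIG: Dict[str, Any] = {
    "data": {
        "table_path": "s3://my-bucket/delta/images",  # hypothetical location
        "version": 3,  # the one value to change to train on another version
    },
}


def dataset_selection(config: Dict[str, Any]) -> Tuple[str, Optional[int]]:
    """Return (table_path, version); a missing or null version means "latest"."""
    data = config["data"]
    version = data.get("version")
    if version is not None and (
        isinstance(version, bool) or not isinstance(version, int) or version < 0
    ):
        raise ValueError(f"Delta versions are non-negative integers, got {version!r}")
    return data["table_path"], version


def version_tag(table_path: str, resolved_version: int) -> str:
    """Build a string to record alongside the experiment for data lineage."""
    return f"{table_path}@v{resolved_version}"
```

When no version is given, it is worth resolving "latest" to a concrete version number (for instance, the highest commit number in `_delta_log/`) before recording it; logging the literal string "latest" would not tell you later which data a model saw.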

Quickly Train a Model with Determined

Spark lets you process huge datasets quickly using efficient distributed computing techniques, but it is largely built to accelerate CPU workloads. Determined takes over where Spark leaves off, allowing you to train deep learning models on dozens to hundreds of GPUs to greatly accelerate training. This allows you to scale your training pipeline alongside your data pipeline and continuously train models on new versions of data.

With Determined, distributed training is dirt simple. All you need to do is change one more line in the configuration file to set how many GPUs you want to use, and you’ll be able to speed up your training with the best distributed training algorithms available.
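Under data parallelism, each GPU processes a different shard of every epoch, and a fixed global batch size is divided among the GPUs. The sketch below shows that bookkeeping, the kind of work a training platform takes care of when you raise the GPU count, assuming a global batch size evenly divisible by the number of GPUs and strided sharding in the style of PyTorch’s `DistributedSampler`. The function names are hypothetical.

```python
import math
from typing import List


def per_slot_batch_size(global_batch_size: int, slots: int) -> int:
    """Split a global batch evenly across GPUs (slots)."""
    if slots < 1 or global_batch_size % slots != 0:
        raise ValueError("global batch size must be divisible by the number of slots")
    return global_batch_size // slots


def shard_indices(num_examples: int, rank: int, world_size: int) -> List[int]:
    """Indices of the examples that worker `rank` reads in one epoch.

    Pads by wrapping around to the start so every worker gets the same number
    of examples, then takes every `world_size`-th index starting at `rank`.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    total = math.ceil(num_examples / world_size) * world_size
    padded = [i % num_examples for i in range(total)]
    return padded[rank:total:world_size]
```

Strided sharding with wrap-around padding gives every worker the same number of steps per epoch, at the cost of a few duplicated examples when the dataset size is not a multiple of the number of GPUs.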

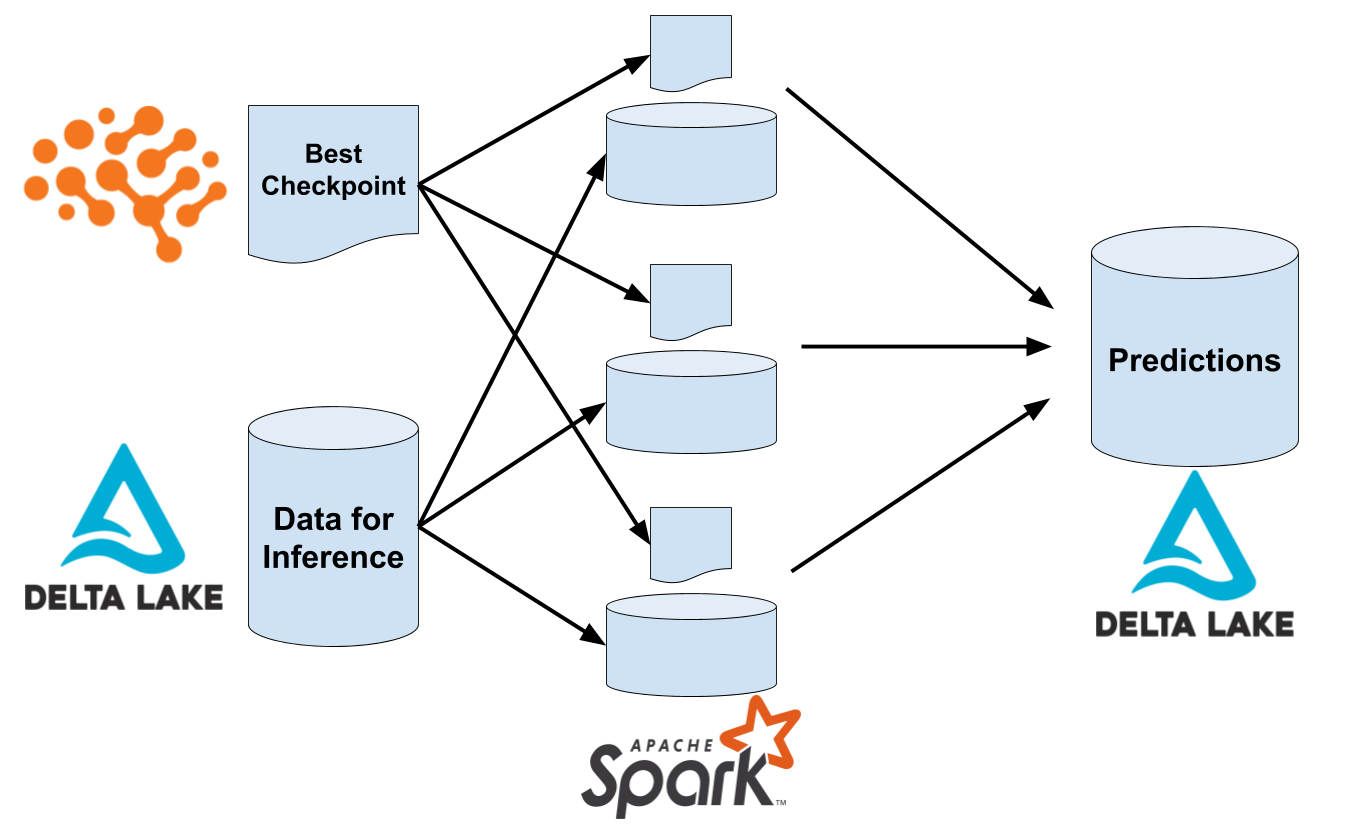

Inference with Spark and Determined

When you train a model with Determined, all of the artifacts and metrics associated with that training run are tracked and accessible programmatically. This makes it really easy for you to export a trained model out of Determined for inference. In code, this looks like:

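```python
import torch
from determined.experimental import Determined

# det_master (the master's address) and experiment_id are assumed to be defined.
det = Determined(master=det_master)

# Fetch the best checkpoint from the training experiment.
checkpoint = det.get_experiment(experiment_id).top_checkpoint()

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load the trained PyTorch model onto the available device.
model = checkpoint.load(map_location=device)
```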

To run inference on Spark, all we need to do is broadcast this model to each of the Spark workers and apply it to our test data. In the reference inference example, we use the Determined checkpoint export API to load our model in Spark, then apply it as a Pandas UDF to new data. Spark lets us scale inference seamlessly to as many cores as we have available.
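The core of that pattern is loading the model once per worker and then scoring batches as they stream in. The sketch below shows that structure in plain Python; `predict_batches` is a hypothetical name, and `load_model` stands in for whatever retrieves the model on the worker (for example, reading `.value` from a Spark broadcast variable). In Spark 3.0 and later, a generator like this can serve as the body of an iterator-style Pandas UDF, whose input and output are iterators of `pandas.Series` batches.

```python
from typing import Any, Callable, Iterable, Iterator, List

Batch = List[Any]


def predict_batches(
    batches: Iterable[Batch],
    load_model: Callable[[], Callable[[Batch], Batch]],
) -> Iterator[Batch]:
    # Load the model a single time, when iteration starts.
    model = load_model()
    for batch in batches:
        # Apply the already-loaded model to each batch in turn.
        yield model(batch)
```

Because Spark hands each task an iterator of batches, the model is loaded once per task rather than once per batch, which avoids repeating an expensive load for every batch.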

Conclusion

If you’re a Spark user looking to accelerate your deep learning workflows, you can get started with Determined here, or join our community Slack if you have any questions!