New Feature: Constrained Hyperparameter Searches

June 10, 2021

Today, we are excited to announce constrained hyperparameter searches, an enhancement to Determined’s hyperparameter search capabilities! Determined already provides best-in-class hyperparameter tuning through an integrated workflow, with support for searchers such as random, grid, and the state-of-the-art ASHA. However, in many research and production use cases, accuracy is not the only metric users want to optimize. For instance, in deployment-constrained scenarios where model size or the latency of a forward pass is paramount (imagine a robotics or autonomous vehicle deployment), users need to find the most accurate model that their hardware can support. Moreover, ML researchers often have prior knowledge of their hyperparameter search space that they want to embed into the search to weed out poorly performing configurations.

Why Constrained Searches Matter

With Determined’s new constrained hyperparameter searches, we make it easy for users to programmatically constrain hyperparameter search spaces in custom ways. Users can embed their pre-existing knowledge into hyperparameter searches (e.g. ensuring a reasonable model size or limiting the number of skip connections), thereby saving hardware resources and training costs. Below, we describe in detail how Determined’s constrained hyperparameter searches work and showcase results from applying search constraints to the NAS CNN search benchmark, an established benchmark for neural architecture search.

Determined lets users specify hyperparameter values and ranges directly in the experiment configuration, so they can run hyperparameter tuning workloads without changing their core model code. With constrained hyperparameter searches, users need only tell the Determined searcher that a given hyperparameter configuration in a trial is invalid. They do so by raising a determined.InvalidHP() exception in the model code.

In the example below, derived from the model_def.py file in our NAS CNN search benchmark experiment, the apply_constraints function shows how we can enforce constraints over hyperparameters from the experiment configuration by raising determined.InvalidHP() when a constraint is violated. This exception can be raised at any time during trial execution, e.g. when the trial is initialized in its __init__() function, or during a training or evaluation step. Users can therefore either rule out configurations they do not want to try in __init__(), or specify training/evaluation metrics that invalidate a given hyperparameter configuration. When the exception is raised, Determined recognizes that the trial has an invalid hyperparameter configuration and gracefully terminates the trial with the user-specified message. For more details on how specific searchers handle constrained hyperparameter searches with Determined, see the reference documentation here.

import determined as det

def apply_constraints(hparams, num_params):
    normal_skip_count = 0
    reduce_skip_count = 0
    normal_conv_count = 0
    for hp, val in hparams.items():
        if val == "skip_connect":
            if "normal" in hp:
                normal_skip_count += 1
            elif "reduce" in hp:
                reduce_skip_count += 1
        if val == "sep_conv_3x3":
            if "normal" in hp:
                normal_conv_count += 1

    # Reject if num skip_connect >= 3 or <1 in either normal or reduce cell.
    if normal_skip_count >= 3 or reduce_skip_count >= 3:
        raise det.InvalidHP("too many skip_connect operations")
    if normal_skip_count == 0 or reduce_skip_count == 0:
        raise det.InvalidHP("too few skip_connect operations")

    # Reject if fewer than 3 sep_conv_3x3 in normal cell.
    if normal_conv_count < 3:
        raise det.InvalidHP("fewer than 3 sep_conv_3x3 operations in normal cell")

    # Reject if num_params > 4.5 million or < 2.5 million.
    if num_params < 2.5e6 or num_params > 4.5e6:
        raise det.InvalidHP(
            "number of parameters in architecture is not between 2.5 and 4.5 million"
        )
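The benchmark example checks its constraints from the hyperparameters and parameter count alone. As a rough illustration of the other case mentioned earlier, where a measured metric invalidates a configuration, here is a minimal sketch. ToyTrial, its evaluate method, and the num_layers, max_layers, and latency budget values are all hypothetical and are not part of Determined's trial API; the local InvalidHP class is only a stand-in so the sketch runs without Determined installed.

```python
# Sketch only: ToyTrial and its methods are hypothetical, not Determined's trial API.
try:
    from determined import InvalidHP  # the real exception, if Determined is installed
except ImportError:
    class InvalidHP(Exception):
        """Local stand-in so the sketch runs without Determined."""


class ToyTrial:
    """Hypothetical trial with one config-time and one metric-time constraint."""

    def __init__(self, hparams, max_layers=8):
        # Config-time check: reject the configuration before any training happens.
        if hparams["num_layers"] > max_layers:
            raise InvalidHP("too many layers for the target hardware")
        self.hparams = hparams

    def evaluate(self, latency_ms, latency_budget_ms=20.0):
        # Metric-time check: reject once a measured metric breaks the budget.
        if latency_ms > latency_budget_ms:
            raise InvalidHP(
                f"forward pass took {latency_ms:.1f} ms, "
                f"over the {latency_budget_ms:.1f} ms budget"
            )
        return {"latency_ms": latency_ms}
```

Checking in the constructor is the cheaper option, since no compute is spent on the trial. A metric-based check only makes sense for quantities that cannot be known until the model has actually run.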

Example

To test this new feature, we apply it to the DARTS CNN search space, a common search space used for neural architecture search (NAS). We use the following hyperparameter constraints (see the above code snippet), motivated by domain knowledge about this search space:

- Too many skip connections are known to lead to performance degradation (Liang et al. 2019), so we limit the number of skip_connect operations to 1 or 2 (strictly between 0 and 3) for both normal and reduce cells.
- Top-performing architectures in the DARTS search space have many separable convolution operations (cf. Liu et al., 2018 and Xu et al., 2019), so we require at least 3 sep_conv_3x3 operations in normal cells.
- Finally, we limit the size of the network to between 2.5M and 4.5M parameters. This can be tuned for particular deployment scenarios.
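To get a feel for how much of a search space such constraints prune, one can sample random configurations and count how many are rejected. The sketch below does this for the two structural constraints only; the parameter-count limit is omitted because it needs a real model. The operation list, the 8 slots per cell, and the hyperparameter key names are assumptions made for illustration and may not match the benchmark's actual configuration. The sampling loop is a toy stand-in, not how Determined's searchers are implemented.

```python
import random

try:
    from determined import InvalidHP  # the real exception, if Determined is installed
except ImportError:
    class InvalidHP(Exception):
        """Local stand-in so the sketch runs without Determined."""

# Assumed toy search space: one operation per slot, 8 slots per cell.
OPS = [
    "max_pool_3x3", "avg_pool_3x3", "skip_connect", "sep_conv_3x3",
    "sep_conv_5x5", "dil_conv_3x3", "dil_conv_5x5",
]
SLOTS_PER_CELL = 8


def sample_hparams(rng):
    """Draw one operation per slot; the key names are hypothetical."""
    return {
        f"{cell}_op{i}": rng.choice(OPS)
        for cell in ("normal", "reduce")
        for i in range(SLOTS_PER_CELL)
    }


def check_structure(hparams):
    """Raise InvalidHP if either structural constraint is violated."""
    for cell in ("normal", "reduce"):
        skips = sum(
            1 for hp, val in hparams.items()
            if cell in hp and val == "skip_connect"
        )
        if not 0 < skips < 3:
            raise InvalidHP(f"{cell} cell has {skips} skip_connect operations")
    convs = sum(
        1 for hp, val in hparams.items()
        if "normal" in hp and val == "sep_conv_3x3"
    )
    if convs < 3:
        raise InvalidHP("fewer than 3 sep_conv_3x3 operations in normal cell")


def rejection_rate(num_samples, seed=0):
    """Fraction of uniformly sampled configurations that get rejected."""
    rng = random.Random(seed)
    rejected = 0
    for _ in range(num_samples):
        try:
            check_structure(sample_hparams(rng))
        except InvalidHP:
            rejected += 1
    return rejected / num_samples
```

Calling rejection_rate(1000) returns the fraction of sampled configurations that the structural constraints would discard before any training time is spent on them.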

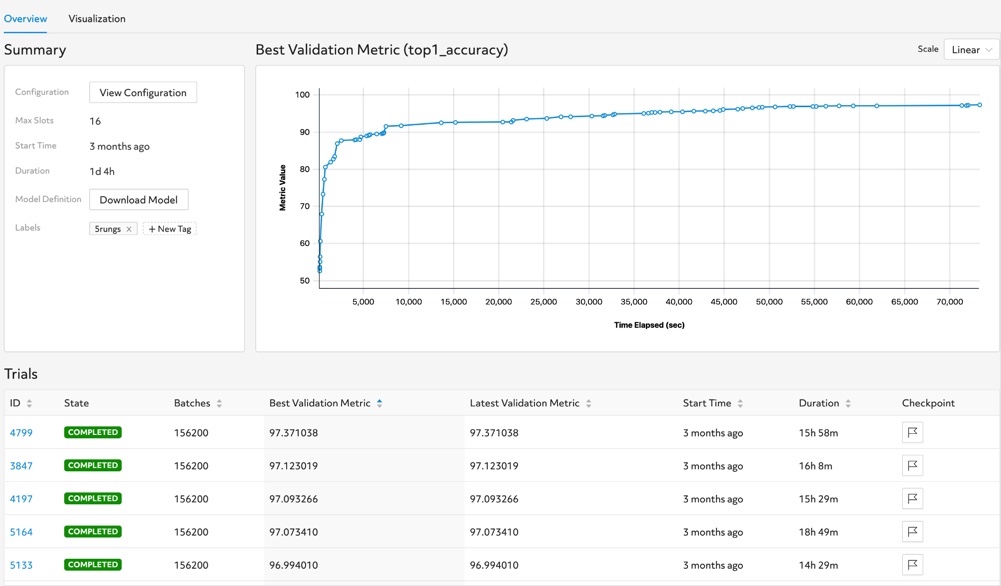

Using these constraints, we achieved the results plotted in the Determined Web UI. The best architecture after searching 1000 hyperparameter settings with ASHA reached a validation accuracy of 97.37% in 300 epochs. For a fair comparison to published results, we trained the discovered architecture from scratch for 600 epochs and averaged the accuracy across 10 random seeds. The best architecture under this evaluation scheme reached a validation accuracy of 97.48% vs. 97.50% for our recent state-of-the-art NAS method GAEA, an impressive result given the simplicity of constrained random search with early stopping.

Try Constrained Searches Out For Yourself!

All of the source code used in achieving these results can be found here with the relevant documentation here, and we encourage you to try it out! You can find support for hyperparameter search constraints in Determined version 0.14.5 and above. If you have any questions or suggestions, please join our community Slack or visit our GitHub repository – we’d love to help!