Stop doing iterative model development

May 20, 2019

Imagine a world in which gradient descent or second-order methods have not yet been invented, and the only way to train machine learning models is to tune their weights by hand. Professors around the country would deploy armies of students to find optimal models by “graduate student descent.” Silos of interns would be put to work slaving through parameter values to find the magic combination that would maximize profits. This alternate reality seems wildly inefficient and expensive compared to standard machine learning practices today. So why are so many engineers taking this approach to model development?

At Determined AI, we work closely with data scientists and machine learning engineers across many industries to improve the productivity of the machine learning model development lifecycle. Our customers have spent many cycles on iterative model development: manual, ad hoc experimentation to improve a model’s performance over an established baseline. We’ve seen them labor for weeks, months, and sometimes years to improve model performance; in many cases, this is an engineer’s entire job. In this post, we introduce search-driven model development, a new paradigm that can reproduce the results of months of iterative work in 24 hours.

A concrete example

Megan is a machine learning engineer at a large advertising company, working on models that aim to optimize the cost per click (CPC). One day, she has an idea for improving her team’s model that she would like to test: her company recently started tracking a new user behavior event, which could be used as an input feature to improve the model’s CPC performance. Using the current production model as a baseline, Megan tests her intuition:

- She modifies the feature generation module to include the new feature in each training instance.

- She modifies the model training pipeline to use the new input feature when training the model.

- She trains two models: one with the new feature enabled and one without.

- She compares the results of the two experiments using their performance on an appropriate validation metric.

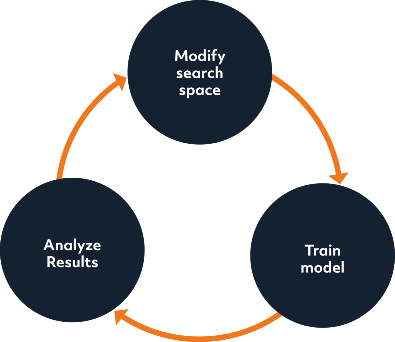
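To make the four steps concrete, here is a minimal, self-contained sketch. Everything in it is hypothetical: the synthetic data (in which the target depends on the new feature by construction), the `make_rows`, `fit`, and `validation_mse` helpers, and the no-intercept least-squares model standing in for Megan’s real CPC model.

```python
import random

def make_rows(n, seed):
    # Steps 1-2 (hypothetical): generate instances that carry the new feature.
    # The target depends on a base feature and, by construction, on the new one.
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        base, new_event = rng.gauss(0, 1), rng.gauss(0, 1)
        target = 2.0 * base + 1.0 * new_event + rng.gauss(0, 0.5)
        rows.append((base, new_event, target))
    return rows

def fit(rows, use_new_feature):
    # No-intercept least squares: one weight, or two via the 2x2 normal equations.
    if not use_new_feature:
        w = sum(x * y for x, _, y in rows) / sum(x * x for x, _, _ in rows)
        return (w, 0.0)
    a = sum(x1 * x1 for x1, _, _ in rows)
    b = sum(x1 * x2 for x1, x2, _ in rows)
    c = sum(x2 * x2 for _, x2, _ in rows)
    d = sum(x1 * y for x1, _, y in rows)
    e = sum(x2 * y for _, x2, y in rows)
    det = a * c - b * b
    return ((c * d - b * e) / det, (a * e - b * d) / det)

def validation_mse(weights, rows):
    w1, w2 = weights
    return sum((y - w1 * x1 - w2 * x2) ** 2 for x1, x2, y in rows) / len(rows)

train, val = make_rows(1000, seed=0), make_rows(500, seed=1)
# Step 3: train two models, with and without the new feature.
results = {flag: validation_mse(fit(train, flag), val) for flag in (False, True)}
# Step 4: compare them on the validation metric.
for flag, mse in results.items():
    print(f"use_new_feature={flag}: validation MSE = {mse:.3f}")
```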

Seems simple and intuitive, right? After all, Megan is following the time-honored scientific method that has driven progress for centuries: pick a hypothesis, test it, adjust it, and repeat. In model development terms, she is using the iterative model development workflow: code, train, analyze, and repeat.

Megan’s process is iterative, meaning the final outcome of her work (the “best” model) will be decided after repeated tests. One way of looking at this problem is to think of each iteration’s decision as a hyperparameter to set or optimize. In machine learning, a hyperparameter is defined as “a parameter whose value is set before the learning process begins.” For example, the decision to include Megan’s new feature is a discrete hyperparameter with two states: “Yes” or “No”. In fact, every decision you make during model development is a hyperparameter. Yes, hyperparameters are more than just learning rates and batch sizes. Here are some examples:

- The subset of input features you decide to include in your model.

- How you decide to preprocess your data (e.g. adding random augmentation).

- The seed you are using to shuffle your input batches, if any.

- The type of model you decide to use (e.g. neural networks vs. gradient-boosted decision trees).

- The optimization procedure you decide to use.

- How many training batches you train for.

- What type of early stopping procedure you decide to use, if any.
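As an illustration, the decisions above can be written down as a single search space. The dimension names and candidate values below are hypothetical placeholders, not recommendations; the point is that feature subsets, preprocessing, seeds, model family, optimizer, training length, and early stopping sit side by side as ordinary hyperparameters.

```python
import itertools
import math

# Hypothetical search space: every modeling decision is a dimension,
# not only the "obvious" ones such as learning rate and batch size.
SEARCH_SPACE = {
    "input_features": [("base",), ("base", "new_event")],
    "augmentation": ["none", "random_crop"],
    "shuffle_seed": [0, 1, 2],
    "model_type": ["neural_network", "gbdt"],
    "optimizer": ["sgd", "adam"],
    "num_training_batches": [1_000, 10_000],
    "early_stopping": [None, "patience_5"],
}

def all_configs(space):
    """Yield every combination of decisions as one config dict."""
    names = list(space)
    for values in itertools.product(*(space[name] for name in names)):
        yield dict(zip(names, values))

n_configs = math.prod(len(values) for values in SEARCH_SPACE.values())
print(n_configs)  # 192
```

Even with only two or three options per decision, this small space already holds 2 × 2 × 3 × 2 × 2 × 2 × 2 = 192 configurations.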

Framing the design process this way exposes three problems with iterative model development.

Problem 1: Iterative model development is a greedy approach that can lead to suboptimal decisions.

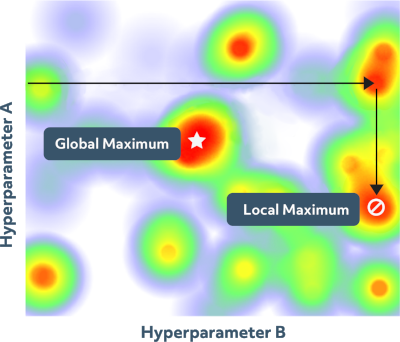

Hyperparameters rarely affect the final validation metric independently of one another; they often interact in unpredictable ways. Consider the two-dimensional heatmap in Figure 1, which shows model validation performance at different values of two distinct hyperparameters. Searching this space by varying only a single hyperparameter at a time corresponds to moving along a single axis. As Figure 1 shows, this often leads to a suboptimal final model.
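The failure mode can be reproduced on a toy objective. This is a minimal sketch with a made-up scoring function (not the data behind Figure 1) whose good region lies along a diagonal, so the two hyperparameters only help when they move together.

```python
import itertools

VALUES = range(10)  # ten candidate settings per hyperparameter

def validation_score(h1, h2):
    # Hypothetical objective with a strong interaction: higher is better,
    # but only when h1 and h2 increase together (a diagonal ridge).
    return -2 * (h1 - h2) ** 2 + (h1 + h2)

def one_at_a_time(start, passes=3):
    """Greedy iterative development: tune h1 with h2 frozen, then h2 with h1 frozen."""
    h1, h2 = start
    for _ in range(passes):
        h1 = max(VALUES, key=lambda v: validation_score(v, h2))
        h2 = max(VALUES, key=lambda v: validation_score(h1, v))
    return (h1, h2), validation_score(h1, h2)

def joint_grid_search():
    """Evaluate every combination, i.e. search both dimensions together."""
    best = max(itertools.product(VALUES, VALUES), key=lambda p: validation_score(*p))
    return best, validation_score(*best)

print(one_at_a_time(start=(0, 0)))  # ((0, 0), 0)
print(joint_grid_search())          # ((9, 9), 18)
```

From the (0, 0) baseline, changing either hyperparameter alone lowers the score, so every one-at-a-time pass stays at the baseline, while the joint search finds the optimum on the diagonal.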

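Due to the curse of dimensionality, this problem only gets worse as you increase the number of hyperparameters you are exploring: the number of possible combinations grows exponentially, while one-at-a-time search covers only a thin slice of them.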
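Some quick arithmetic, assuming k = 10 candidate values per hyperparameter and a single one-at-a-time pass from a baseline: a full grid over d hyperparameters has k^d configurations, while one-at-a-time search visits only 1 + d(k - 1) of them.

```python
def grid_size(k, d):
    # Every combination of k values across d hyperparameters.
    return k ** d

def one_at_a_time_size(k, d):
    # The baseline plus the k - 1 other values along each of the d axes (one pass).
    return 1 + d * (k - 1)

for d in (1, 2, 3, 5, 8):
    total, visited = grid_size(10, d), one_at_a_time_size(10, d)
    print(f"d={d}: {total:,} configurations, one-at-a-time visits {visited} ({visited / total:.6%})")
```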

Problem 2: The iterative model development cycle is expensive.

Iterative model development is expensive in terms of computational resources, but more importantly, it consumes considerable human resources. Given the highly competitive market for talent in the machine learning engineering space, your time as a model developer may be one of the most precious resources available to your team. Let’s take another look at the tasks Megan has to perform for a cycle of iterative model development:

- She modifies the feature generation module to introduce the new input feature.

- She modifies the model training pipeline to use the new input feature when training the model.

- She trains two models: one with the input feature enabled and one without the input feature enabled.

- She compares the results of the two experiments on the validation metrics.

Steps 1 and 2 require careful design thinking, software development, and/or unit testing: all non-trivial tasks that are a good use of Megan’s time. Steps 3 and 4, however, are probably a waste of her time. The mechanics of kicking off a model training job, babysitting that job, and comparing the results on well-defined validation metrics could easily be automated.
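Steps 3 and 4 reduce to a small, generic loop. The sketch below assumes a hypothetical `train_fn` that takes a config and returns a validation loss; in practice it would launch and monitor a real training job. The fixed numbers returned by `toy_train` are placeholders, not results.

```python
from typing import Callable, Dict, List, Mapping, Tuple

Config = Mapping[str, object]

def run_and_compare(
    train_fn: Callable[[Config], float],
    variants: Dict[str, Config],
    higher_is_better: bool = False,
) -> List[Tuple[str, float]]:
    """Automate steps 3 and 4: train every variant and rank them by one validation metric."""
    metrics = {name: train_fn(cfg) for name, cfg in variants.items()}
    return sorted(metrics.items(), key=lambda item: item[1], reverse=higher_is_better)

def toy_train(cfg: Config) -> float:
    # Hypothetical stand-in for a real training job: returns a fixed validation loss.
    return 0.30 if cfg["enable_feature_a"] else 0.34

ranking = run_and_compare(
    toy_train,
    {"baseline": {"enable_feature_a": False}, "with_feature_a": {"enable_feature_a": True}},
)
print(ranking)  # [('with_feature_a', 0.3), ('baseline', 0.34)]
```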

Problem 3: The iterative model development cycle is sequential.

Iterative model development is fundamentally limited because it is sequential: each iteration builds on the conclusions of the previous one. As a thought exercise, imagine you had 10 engineers who could work on the same modeling problem, each testing a different hyperparameter. Would it make sense to have each engineer develop their own branch of iterative model development? Probably not, because they would quickly run into conflicting conclusions that would be difficult to merge into a final result.
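By contrast, trials in a hyperparameter search do not depend on each other’s conclusions, so they can run concurrently. This is a minimal sketch using Python’s `concurrent.futures`; `train_and_evaluate` and its loss formula are hypothetical stand-ins for a real training job, and a thread pool is used only to keep the example self-contained (real training jobs would typically run as separate processes or on separate machines).

```python
import itertools
from concurrent.futures import ThreadPoolExecutor

def train_and_evaluate(config):
    # Hypothetical stand-in for a full training job; returns a validation loss.
    return abs(config["learning_rate"] - 0.01) * 100 + abs(config["depth"] - 4)

configs = [
    {"learning_rate": lr, "depth": depth}
    for lr, depth in itertools.product([0.001, 0.01, 0.1], [2, 4, 8])
]

# The trials are independent, so up to ten of them can be evaluated at once.
with ThreadPoolExecutor(max_workers=10) as pool:
    losses = list(pool.map(train_and_evaluate, configs))

# Pick the best trial by loss only (dicts themselves are not comparable).
best_loss, best_config = min(zip(losses, configs), key=lambda pair: pair[0])
print(best_config, best_loss)  # {'learning_rate': 0.01, 'depth': 4} 0.0
```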

Search-Driven Model Development

A search-driven approach is a solution to the problems inherent in iterative model development. Here are three guiding principles you can use to achieve better results faster:

Develop a search space instead of developing a model

One way to get away from iterative model development is to practice developing search spaces rather than models. Most modeling decisions made in code should be easily toggleable through a command-line flag or a configuration file. When making changes to the model training codebase, aim to add dimensions to the search space instead of hardcoding those changes. For example, Megan could add a command-line flag (--enable-feature-a) to enable experimentation with her new feature. Or, she could develop a custom configuration format that makes it simple to declare the features associated with a training invocation.

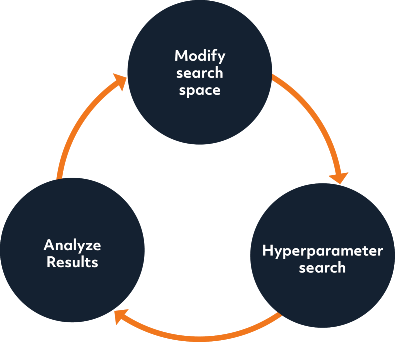
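A minimal sketch of both approaches using Python’s standard `argparse` and `json` modules. Only `--enable-feature-a` comes from the text above; the other flags, the feature names, and the JSON config format are hypothetical.

```python
import argparse
import json

BASE_FEATURES = ["ad_position", "historical_ctr"]  # hypothetical feature names

def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Hypothetical training entry point")
    # Each flag is one dimension of the search space rather than a hardcoded choice.
    parser.add_argument("--enable-feature-a", action="store_true",
                        help="add the new user-behavior feature to every training instance")
    parser.add_argument("--model-type", choices=["neural_network", "gbdt"], default="gbdt")
    parser.add_argument("--learning-rate", type=float, default=0.01)
    return parser.parse_args(argv)

def features_from_args(args):
    return BASE_FEATURES + (["feature_a"] if args.enable_feature_a else [])

def features_from_config(text):
    # Alternative: a small declarative config listing the features for one run.
    return json.loads(text)["features"]

args = parse_args(["--enable-feature-a", "--model-type", "neural_network"])
print(features_from_args(args))  # ['ad_position', 'historical_ctr', 'feature_a']
print(features_from_config('{"features": ["ad_position", "feature_a"]}'))
```

A search tool can then treat each flag as a dimension, launching runs such as `--enable-feature-a --model-type gbdt` without any code changes between trials.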

Run hyperparameter searches as opposed to training single models

The field of exploring a modeling search space, or hyperparameter optimization, has been heavily studied in academia. Automated methods such as adaptive resource allocation and Bayesian optimization have been shown to be both more accurate and more efficient than manual selection of hyperparameters. Although these methods are often applied to narrow sets of “obvious” hyperparameters (such as learning rate and batch size), model developers sometimes fail to connect these methods to the full search space they’re exploring. Don’t fall into this trap! Everything is a hyperparameter.

Get out of the mindset that your hyperparameters should be “tuned” after you have decided on a modeling approach. Hyperparameter search and exploring a search space of modeling decisions are one and the same. As soon as you have encoded your intuition into a search space, run experiments exploring that search space. Your search space will naturally contract and expand—perhaps you add new features, new model architectures, or new optimizers along the way. But, crucially, you’re always considering the search space as a whole as opposed to individual model instantiations.
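A minimal random-search sketch over a search space that mixes a feature toggle, a model family, and a learning rate. The space, the sampling distributions, and the toy `validation_loss` are all hypothetical; plain random search stands in here for the more efficient methods mentioned above, such as Bayesian optimization or adaptive resource allocation.

```python
import math
import random

# Hypothetical search space: a feature toggle sits next to "obvious" hyperparameters.
SPACE = {
    "enable_feature_a": lambda rng: rng.random() < 0.5,
    "model_type": lambda rng: rng.choice(["neural_network", "gbdt"]),
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform in [1e-4, 1e-1]
}

def sample(rng):
    return {name: draw(rng) for name, draw in SPACE.items()}

def validation_loss(cfg):
    # Toy stand-in objective; a real one would train and evaluate a model.
    loss = abs(math.log10(cfg["learning_rate"]) + 2)  # best near 1e-2
    loss += 0.0 if cfg["enable_feature_a"] else 0.5
    loss += 0.0 if cfg["model_type"] == "gbdt" else 0.2
    return loss

def random_search(n_trials, seed=0):
    rng = random.Random(seed)
    trials = [sample(rng) for _ in range(n_trials)]
    return min(trials, key=validation_loss)

best = random_search(200)
print(best, validation_loss(best))
```

Because the feature toggle is just another dimension, the search answers Megan’s question about the new feature in the same run that picks the model family and learning rate.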

Get your infrastructure tooling right

When shifting the development paradigm to exploring search spaces as opposed to training models, it is essential to have great infrastructure tooling that supports doing this efficiently. You’ll need tools to automate scheduling for these training jobs, especially if you’re exploring hundreds or thousands of models at a time. You’ll also need tools to help manage the huge amount of metadata generated from these experiments: metrics, hyperparameters, logs, and more. Finally, when exploring sufficiently large search spaces, random search or grid search is probably too computationally intensive to be effective; you’ll need more efficient methods of exploring search spaces, such as Hyperband or population-based training. At Determined AI we’ve built a comprehensive platform to solve all of these challenges and more.
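Much of Hyperband’s efficiency comes from successive halving, which Hyperband runs several times with different trade-offs between the number of configurations and the budget per configuration. The sketch below implements only a single round of successive halving, not full Hyperband or population-based training, and `toy_train_for` is a hypothetical learning-curve simulator in place of real training.

```python
import random

def successive_halving(configs, train_for, min_budget=1, eta=3):
    """Train all configs briefly, keep the best 1/eta, and give survivors eta times more budget."""
    survivors, budget, total_cost = list(configs), min_budget, 0
    while len(survivors) > 1:
        total_cost += budget * len(survivors)
        ranked = sorted(survivors, key=lambda cfg: train_for(cfg, budget))
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0], total_cost

def toy_train_for(cfg, budget):
    # Hypothetical learning curve: loss decays toward a config's (hidden) final loss.
    return cfg["final_loss"] + 1.0 / budget

rng = random.Random(0)
configs = [{"id": i, "final_loss": rng.uniform(0.1, 1.0)} for i in range(27)]
best, cost = successive_halving(configs, toy_train_for)
# cost is 81 budget units, vs. 27 * 9 = 243 to train all 27 configs at the largest budget used
print(best["id"], cost)
```

Real learning curves can cross, so early rungs may discard a configuration that would have won with more training; Hyperband hedges against this by also running brackets that start with fewer configurations and larger budgets.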

An addendum on sizing search spaces

Ambitious engineers may take this solution to the extreme and ask, “Why define a search space at all when I could use the space of all possible models?” Unfortunately, exploring the giant search space of all possible models is prohibitively expensive if you don’t have the resources of a tech giant behind you. In addition, as a machine learning practitioner, you may have some intuition around a problem you’re tackling. For example, you may know that convolutional neural network architectures tend to perform well on computer vision problems, or that recurrent neural network architectures tend to perform well on time series problems.

Encoding your intuitions into well-designed search spaces will significantly reduce the complexity of finding a good point in that search space. In deep learning, the branch of research focused on searching over model architectures efficiently and accurately is called Neural Architecture Search (NAS). While the research is still in its early phases, all of the leading NAS methods use carefully defined search spaces specific to their task to get great results (e.g. CNN search spaces for vision tasks).
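One way to encode intuition is to let the task restrict which parts of a larger search space get explored. In this sketch, the architecture families, their parameters, and the `PRIORS` mapping are hypothetical; a real NAS search space would be far richer.

```python
import itertools

# Hypothetical architecture families and their own sub-search-spaces.
ARCHITECTURES = {
    "cnn": {"num_conv_layers": [2, 4, 8], "kernel_size": [3, 5]},
    "rnn": {"num_layers": [1, 2, 3], "cell": ["lstm", "gru"]},
    "mlp": {"num_layers": [2, 4], "hidden_units": [128, 512]},
}

# Hypothetical prior knowledge: which families are worth searching for each task.
PRIORS = {"vision": ["cnn"], "time_series": ["rnn"]}

def expand(family):
    params = ARCHITECTURES[family]
    names = list(params)
    for values in itertools.product(*(params[name] for name in names)):
        yield {"family": family, **dict(zip(names, values))}

def search_space(task=None):
    # Without a prior for the task, fall back to searching every family.
    families = PRIORS.get(task, list(ARCHITECTURES))
    return [cfg for family in families for cfg in expand(family)]

print(len(search_space()))          # 16
print(len(search_space("vision")))  # 6
```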

Interested in improving the productivity of your team’s model development process? We’d love to help—drop us a message to get started.