Blogs

Weekly updates from our team on topics like large-scale deep learning training, cloud GPU infrastructure, hyperparameter tuning, and more.

OCT 25, 2023

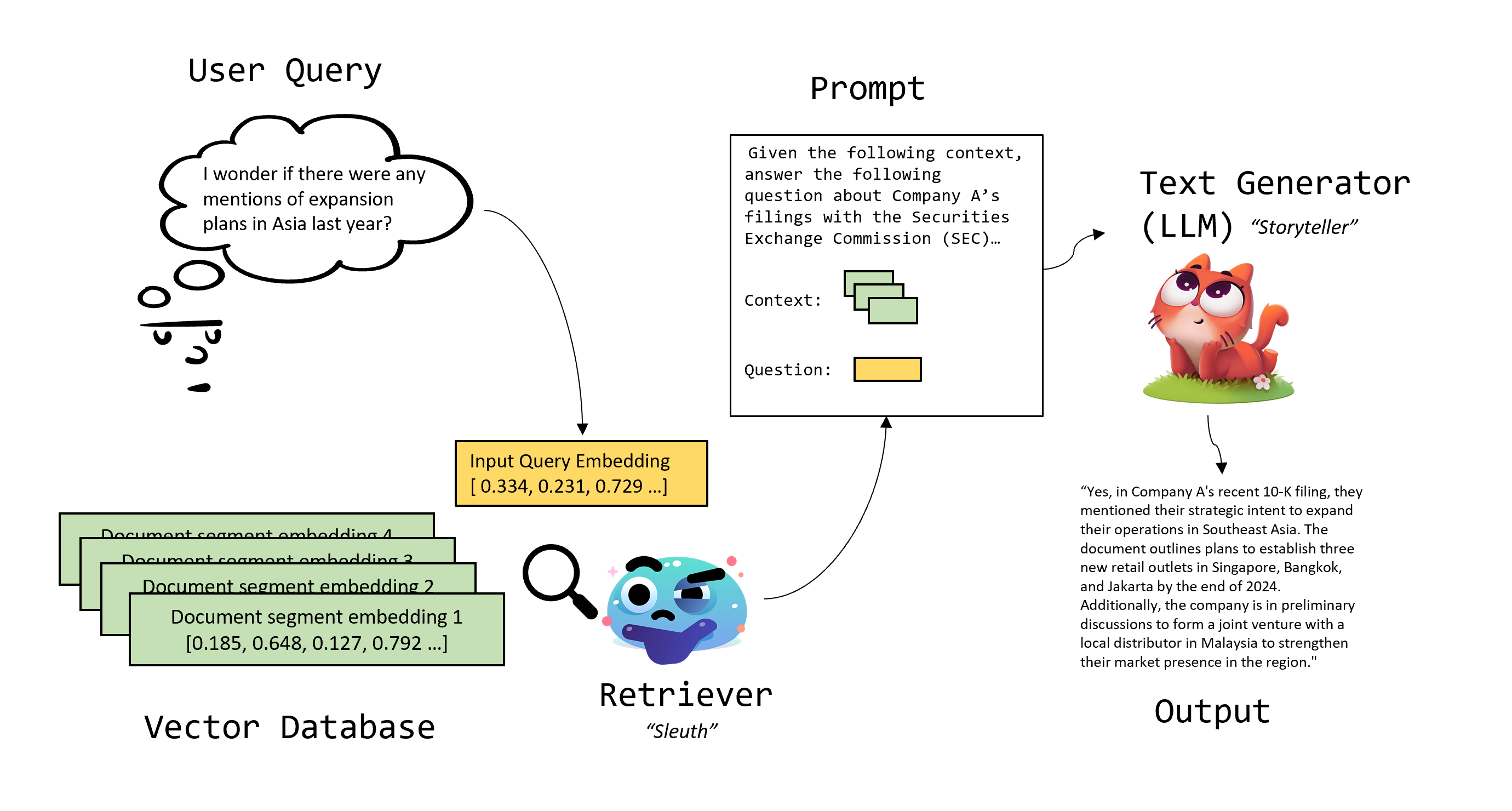

The Sleuth and the Storyteller: The Dynamic Duo behind RAG

A quick overview of Retrieval-Augmented Generation (RAG).

OCT 23, 2023

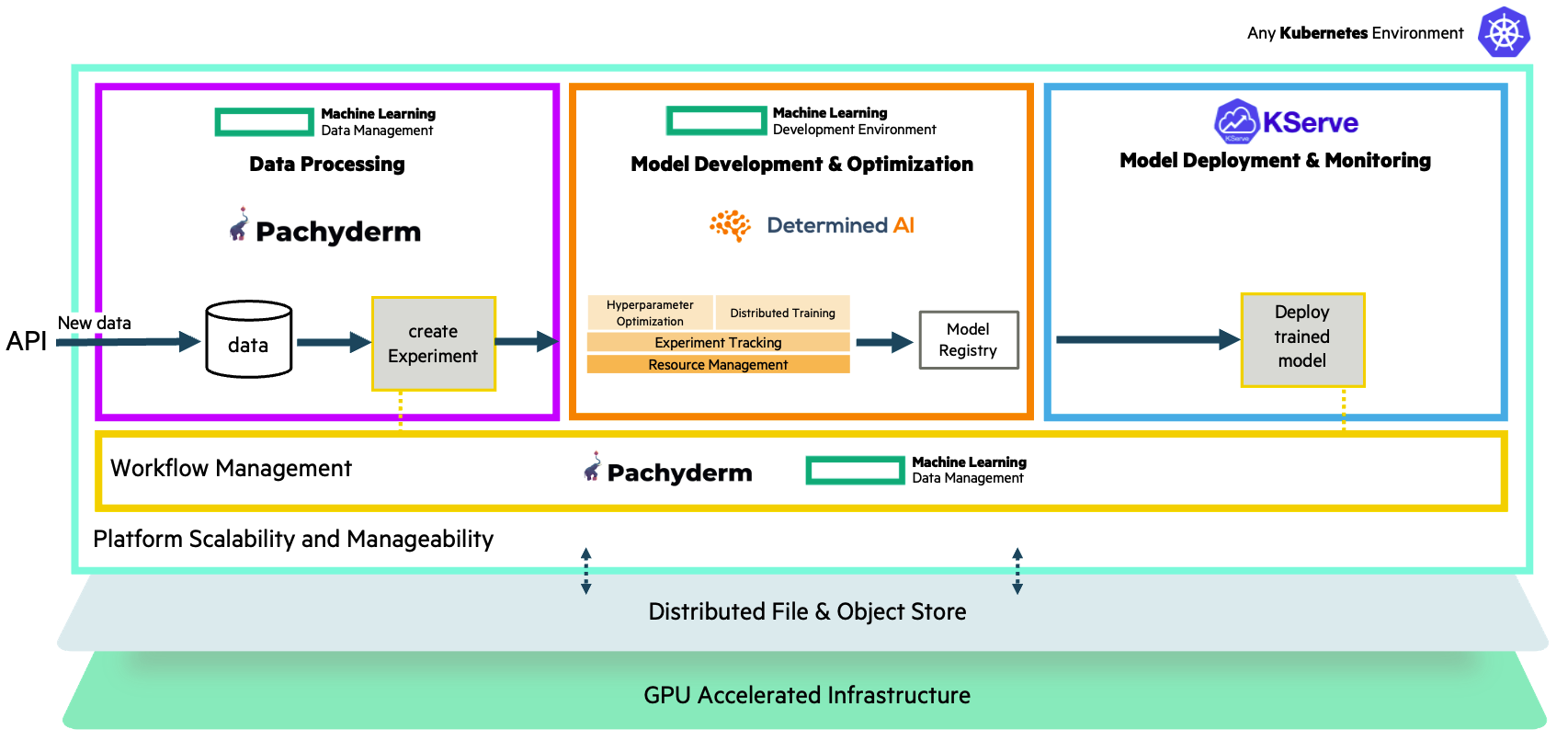

New video demo: End-to-end MLOps using Pachyderm, Determined, and KServe

Learn how the PDK (Pachyderm, Determined, KServe) stack works at a high level.

OCT 11, 2023

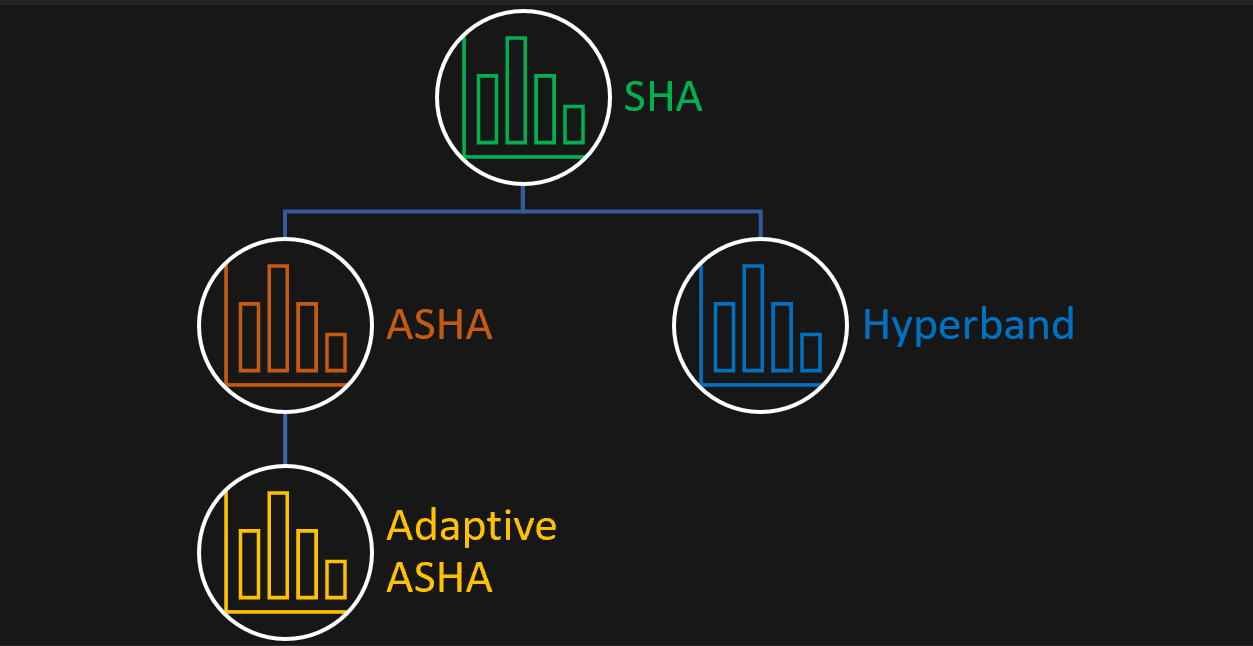

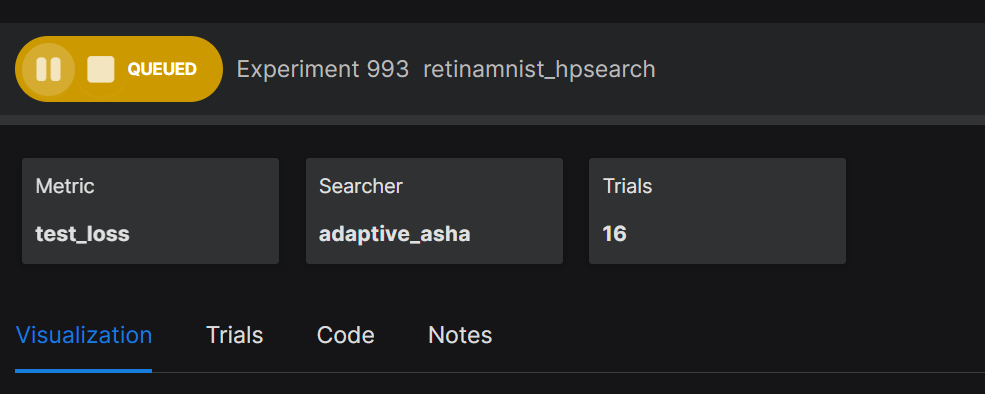

Adaptive ASHA Explained using America’s Got Talent

A state-of-the-art hyperparameter optimization algorithm, explained using a fun analogy.

OCT 04, 2023

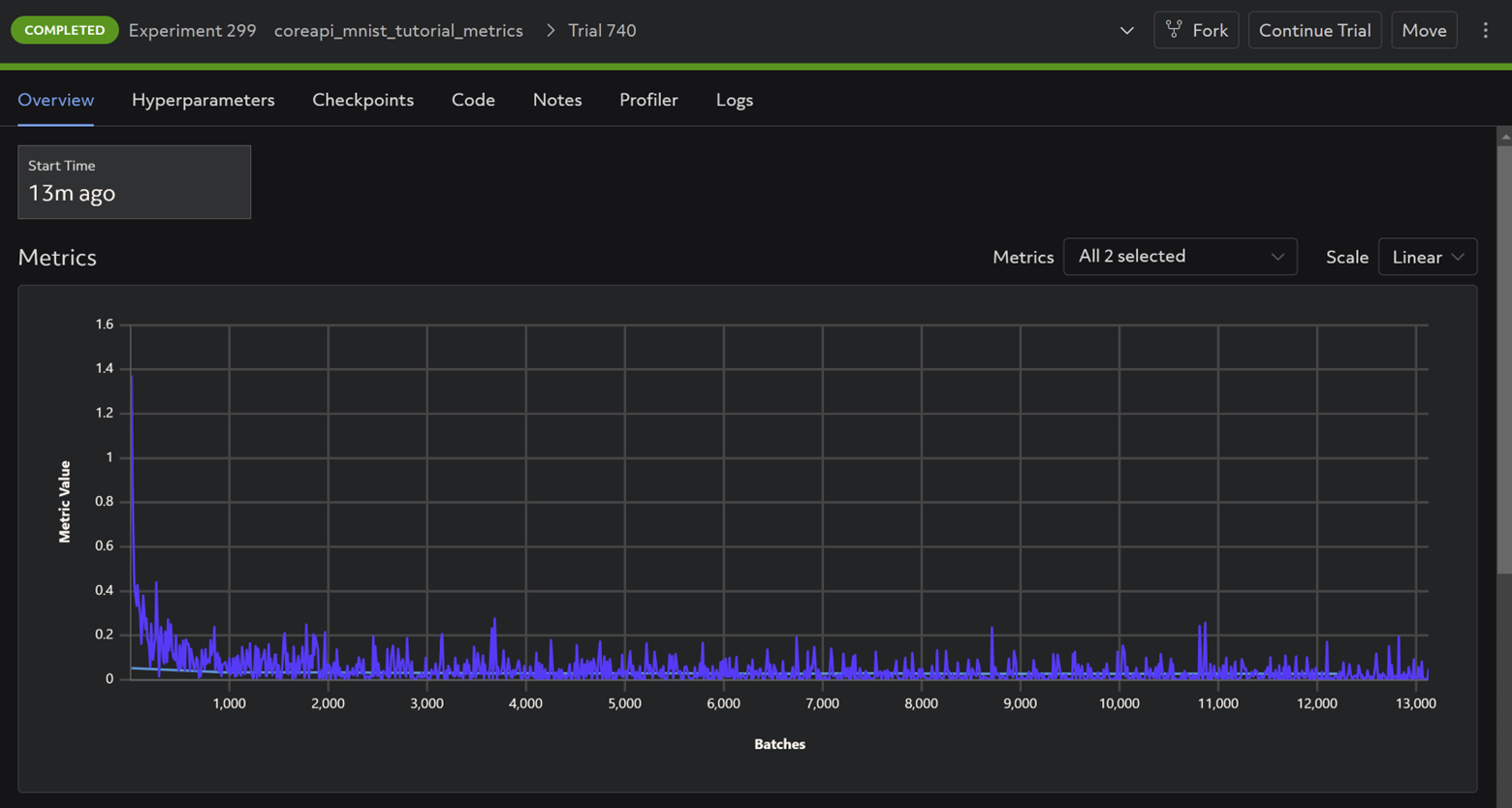

Use Batch Inference in Determined Part 2: Validate Trained Models with Metrics

New functionality for evaluating models outside of training jobs!

OCT 02, 2023

New Video Walkthrough: Installing Determined on macOS

Learn how to install Determined on macOS so you can run machine learning experiments locally on your MacBook.

SEP 26, 2023

Optimizing Workloads: The Preemption Puzzle Solved by Determined AI

Preemption is a feature we don’t talk about enough, yet it is one of the hidden gems of Determined.

SEP 18, 2023

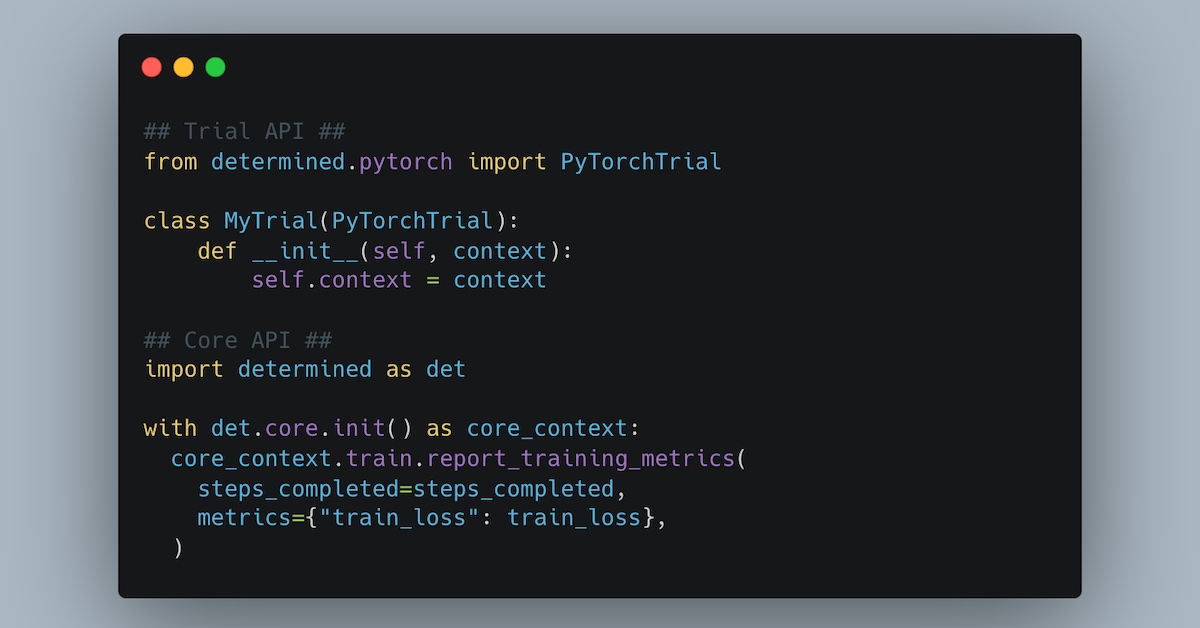

Iterate on your code locally using the PyTorch Trainer API

Streamlining your PyTorch workflow: from local iteration to cluster deployment

SEP 11, 2023

Detached Mode: Log metrics in Determined for workloads run anywhere

Introducing a new, flexible way to log metrics with Determined. Available in versions 0.25.0 and up.

SEP 01, 2023

Transfer Learning Made Easy with Determined

Transform your research workflow by using our Trial APIs!